1. Introduction to TSUBAME3.0

End of operation

TSUBAME3 is no longer in operation. You can find TSUBAME4 manuals here.

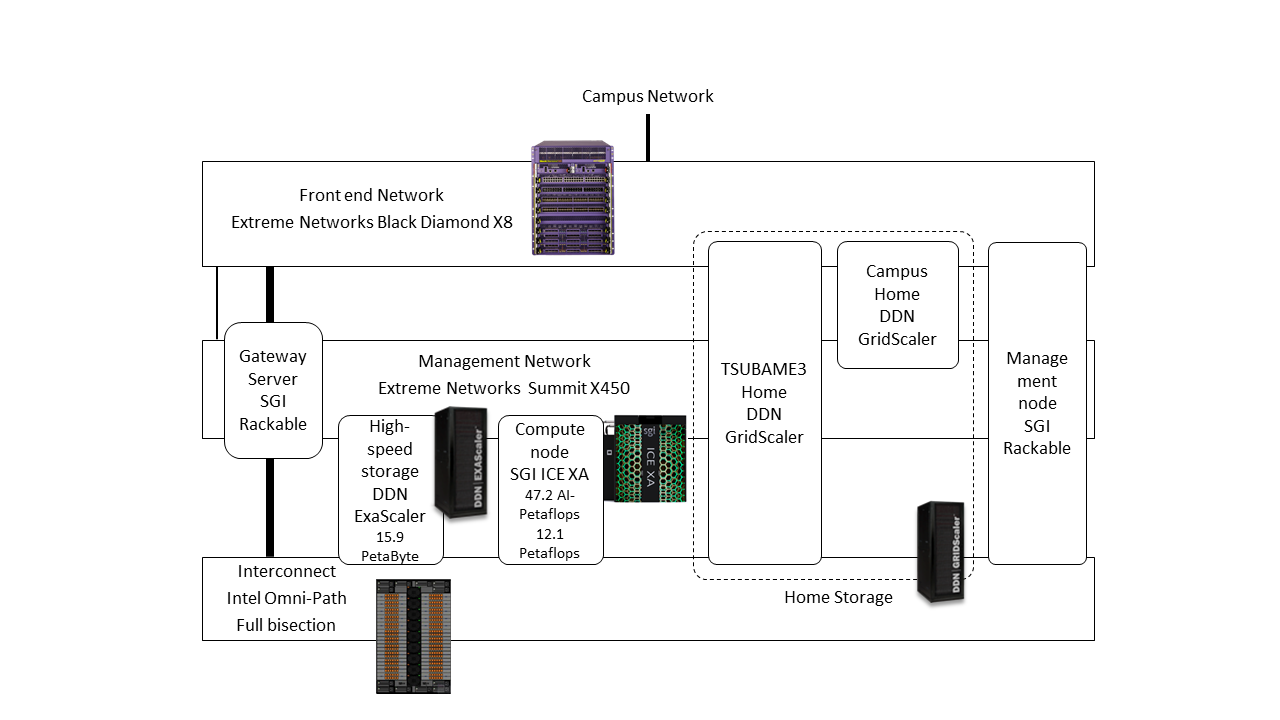

1.1. System architecture

This system is a shared computer that can be used by various research and development departments at Tokyo Institute of Technology.

Each compute node and storage system is connected to a high-speed Omni-Path network. The system is currently connected to the Internet at 20 Gbps and will be connected at 100 Gbps via SINET5 in the future (as of May 2019).
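To give a feel for the difference between the two Internet link speeds mentioned above, the following minimal sketch estimates an ideal transfer time. It assumes the full link rate is available with no protocol overhead or contention, which real transfers will not achieve; `transfer_seconds` and the 1 TB payload are illustrative choices, not part of any TSUBAME tool.

```python
def transfer_seconds(size_bytes, link_gbps):
    """Ideal transfer time in seconds, ignoring overhead and contention (assumption)."""
    return size_bytes * 8 / (link_gbps * 1e9)

ONE_TB = 10**12  # decimal terabyte, chosen only for illustration

t20 = transfer_seconds(ONE_TB, 20)    # current 20 Gbps link
t100 = transfer_seconds(ONE_TB, 100)  # planned 100 Gbps link

print(t20)   # 400.0
print(t100)  # 80.0
```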

The system architecture of TSUBAME 3.0 is shown below.

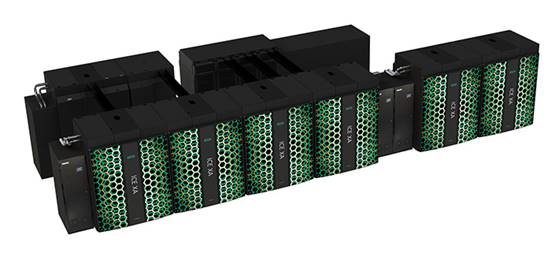

1.2. Compute node configuration

The compute nodes of this system form a large-scale blade cluster consisting of 540 SGI ICE XA nodes.

Each compute node is equipped with two Intel Xeon E5-2680 v4 processors (2.4 GHz, 14 cores each), for a total of 15,120 cores.

The main memory capacity is 256 GiB per compute node, for a total of 135 TiB.
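The system-wide totals follow directly from the per-node figures. A minimal sketch of the arithmetic (the variable names are illustrative):

```python
# Per-node figures taken from the text above.
NODES = 540
CPUS_PER_NODE = 2
CORES_PER_CPU = 14
MEM_PER_NODE_GIB = 256

total_cores = NODES * CPUS_PER_NODE * CORES_PER_CPU
total_mem_tib = NODES * MEM_PER_NODE_GIB / 1024  # 1 TiB = 1024 GiB

print(total_cores)    # 15120
print(total_mem_tib)  # 135.0
```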

Each compute node has four Intel Omni-Path interface ports, and the nodes form a fat-tree topology through Omni-Path switches.

The basic specifications of the TSUBAME 3.0 machine are as follows.

| Unit name | Compute Node x 540 |
|---|---|
| Configuration (per node) | |
| CPU | Intel Xeon E5-2680 v4 2.4GHz x 2CPU |
| Cores/threads | 14 cores / 28 threads x 2CPU |
| Memory | 256GiB |
| GPU | NVIDIA Tesla P100 for NVLink-Optimized Servers x 4 |
| SSD | 2TB |
| Interconnect | Intel Omni-Path HFI 100Gbps x 4 |
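A few aggregate figures can be derived from the per-node specification in the table. The sketch below is illustrative only: `NodeSpec` is a hypothetical helper, and the per-node interconnect figure is simply the nominal sum of the four link rates, not a measured throughput.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class NodeSpec:
    """Per-node values copied from the specification table."""
    cpus: int = 2
    threads_per_cpu: int = 28
    gpus: int = 4
    hfi_ports: int = 4
    hfi_gbps: int = 100

NODES = 540
spec = NodeSpec()

threads_per_node = spec.cpus * spec.threads_per_cpu   # hardware threads per node
total_gpus = NODES * spec.gpus                        # GPUs across the whole system
nominal_node_gbps = spec.hfi_ports * spec.hfi_gbps    # nominal sum of link rates

print(threads_per_node)   # 56
print(total_gpus)         # 2160
print(nominal_node_gbps)  # 400
```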

1.3. Software configuration

The operating system (OS) of this system is as follows.

- SUSE Linux Enterprise Server 12 SP2
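A script that needs to confirm the OS release can read the standard `/etc/os-release` file. The following is a minimal sketch: `parse_os_release` is a hypothetical helper, and the sample text is an illustrative assumption rather than output captured from a TSUBAME3.0 node.

```python
def parse_os_release(text):
    """Parse KEY=VALUE lines in os-release format into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=VALUE.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info

# Illustrative sample only (assumed, not captured from a real node).
sample = (
    'NAME="SLES"\n'
    'VERSION="12-SP2"\n'
    'PRETTY_NAME="SUSE Linux Enterprise Server 12 SP2"\n'
)

info = parse_os_release(sample)
print(info["PRETTY_NAME"])
```

On a real node, the same function could be given the contents of `/etc/os-release` instead of the sample string.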

For the application software available on this system, please refer to ISV Application and Freeware.

1.4. Storage configuration

This system has high-speed, large-capacity storage for storing various simulation results.

On the compute nodes, the Lustre file system is used as the high-speed storage area, and the home directory is shared via GPFS + cNFS.

In addition, a 2 TB SSD is installed in each compute node as a local scratch area. The file systems available on this system are listed below.

| Usage | Mount point | Capacity | Filesystem |
|---|---|---|---|
| Home directory, shared space for applications | /home, /apps | 40TB | GPFS+cNFS |
| Massively parallel I/O spaces 1 | /gs/hs0 | 4.8PB | Lustre |
| Massively parallel I/O spaces 2 | /gs/hs1 | 4.8PB | Lustre |
| Massively parallel I/O spaces 3 | /gs/hs2 | 4.8PB | Lustre |
| Local scratch | /scr | 1.9TB/node | xfs(SSD) |
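As a rough way to check from a script which of these file systems are visible on a given node, the sketch below queries each mount point with Python's standard `shutil.disk_usage`. The mount points come from the table; `report_usage` is a hypothetical helper, and paths that do not exist on the current machine are reported as `None`.

```python
import os
import shutil

# Mount points and file system types from the table above.
FILESYSTEMS = {
    "/home": "GPFS+cNFS",
    "/apps": "GPFS+cNFS",
    "/gs/hs0": "Lustre",
    "/gs/hs1": "Lustre",
    "/gs/hs2": "Lustre",
    "/scr": "xfs (SSD)",
}

def report_usage(paths):
    """Return {path: (total_bytes, free_bytes)}, or None for unavailable paths."""
    usage = {}
    for path in paths:
        usage[path] = None
        if os.path.isdir(path):
            try:
                du = shutil.disk_usage(path)
                usage[path] = (du.total, du.free)
            except OSError:
                pass  # leave as None if the path cannot be queried
    return usage

for path, stats in report_usage(FILESYSTEMS).items():
    if stats is None:
        print(f"{path} ({FILESYSTEMS[path]}): not available on this machine")
    else:
        total, free = stats
        print(f"{path} ({FILESYSTEMS[path]}): {free / 1e12:.2f} TB free of {total / 1e12:.2f} TB")
```

The figures reported this way reflect the whole file system, not any per-user or per-group quota.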